In a case that highlights the growing intersection of technology and human rights, 15 Holocaust survivors have turned to the courts to challenge Meta Platforms Inc.’s handling of antisemitic content on its Facebook platform. The case raises pressing questions about social media’s role in spreading hate speech and the responsibility of tech companies to curb it.

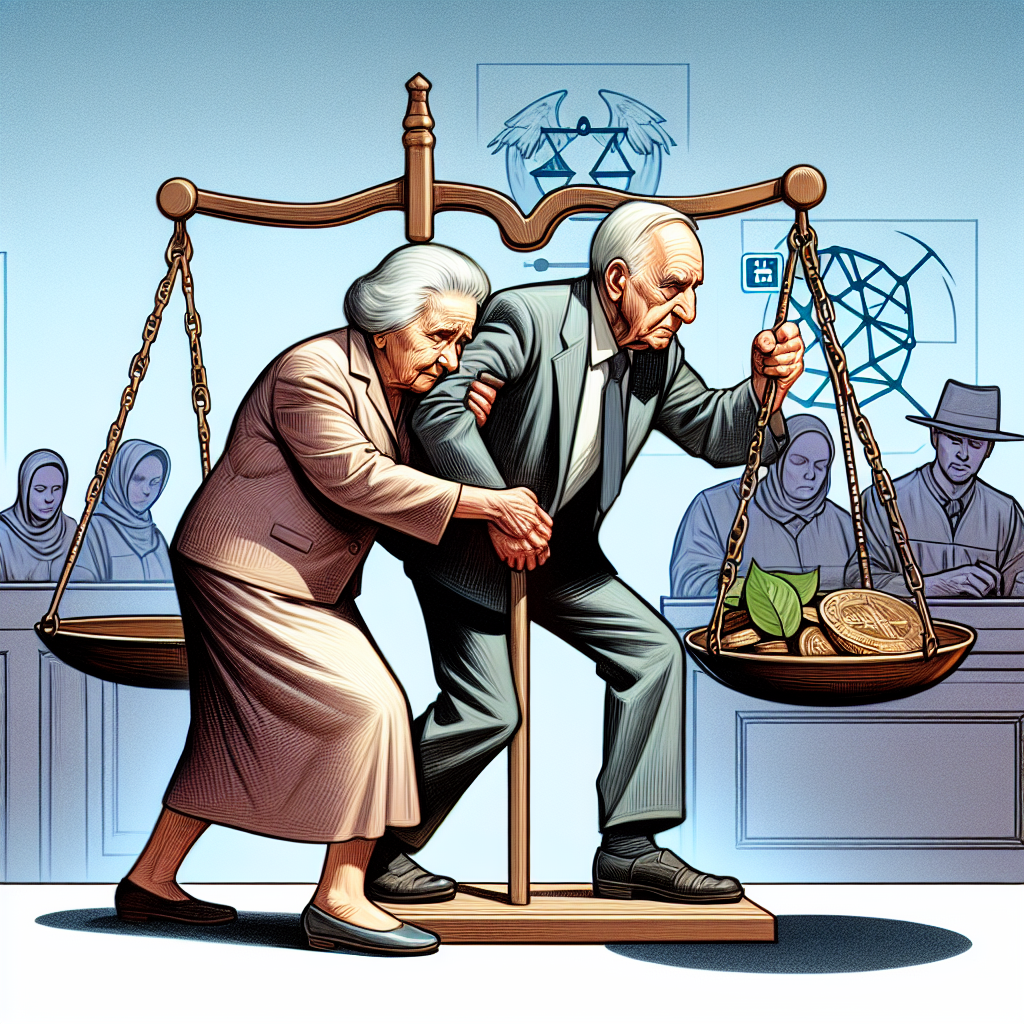

The lawsuit, filed in Israel, seeks an injunction against Facebook for allegedly failing to do enough to remove antisemitic content. According to the survivors, the platform has systematically failed to enforce its own policies against hate speech, allowing antisemitic content to proliferate and disregarding the rights and safety of Jewish users. They ask the court to compel Facebook to remove posts that deny or distort the Holocaust and to actively monitor and correct any shortcomings in its moderation of antisemitic content.

The Holocaust, in which six million Jews were murdered during World War II, is one of the darkest chapters in human history, and its denial has long been a tactic antisemitic groups use to spread hate and disinformation. The issue gained renewed attention in October 2020, when Facebook amended its hate speech policy to ban content that denies or distorts the Holocaust, a significant policy shift after years of criticism for allowing such content to spread. Meta’s current community standards explicitly prohibit hate speech, which the company defines as a direct attack on people based on what it calls “protected characteristics,” and Meta says it enforces these rules using both technology and human review.

Despite these stated policies, the plaintiffs argue that enforcement is lax and inadequate, saying that many reports of offensive posts are met with responses indicating that the content does not violate Facebook’s guidelines. This, the survivors say, not only dismisses their trauma but also contributes to a wider climate of bigotry that could incite further discrimination or violence.

Legal experts note that such cases are complex because they hinge on balancing free expression against the need to protect individuals from hate speech. The challenge is especially acute online, where speech can spread rapidly and reach global audiences with unprecedented ease. As a result, social media companies like Meta face increasing scrutiny over whether they can moderate content effectively without infringing on freedom of expression.

This legal action reflects the broader pressure on social media giants worldwide as they grapple with content moderation. Governments and civil society groups are increasingly demanding greater accountability and transparency in how these companies handle content that can foster hate and disinformation.

As the case progresses, it could serve as a bellwether for how tensions between technology, ethics, and freedom of expression are managed, not only in Israel but globally. Its outcome could influence policy decisions and operational practices across the tech industry, reflecting a growing recognition of the powerful role social media plays in shaping societal norms and values.

Meta has not publicly responded to the specifics of the lawsuit but has previously stated its commitment to combating hate speech across its platforms. How the company handles this legal challenge could have far-reaching implications for its global user base and for the broader debate about the responsibility of social media networks to foster safe and inclusive online environments.