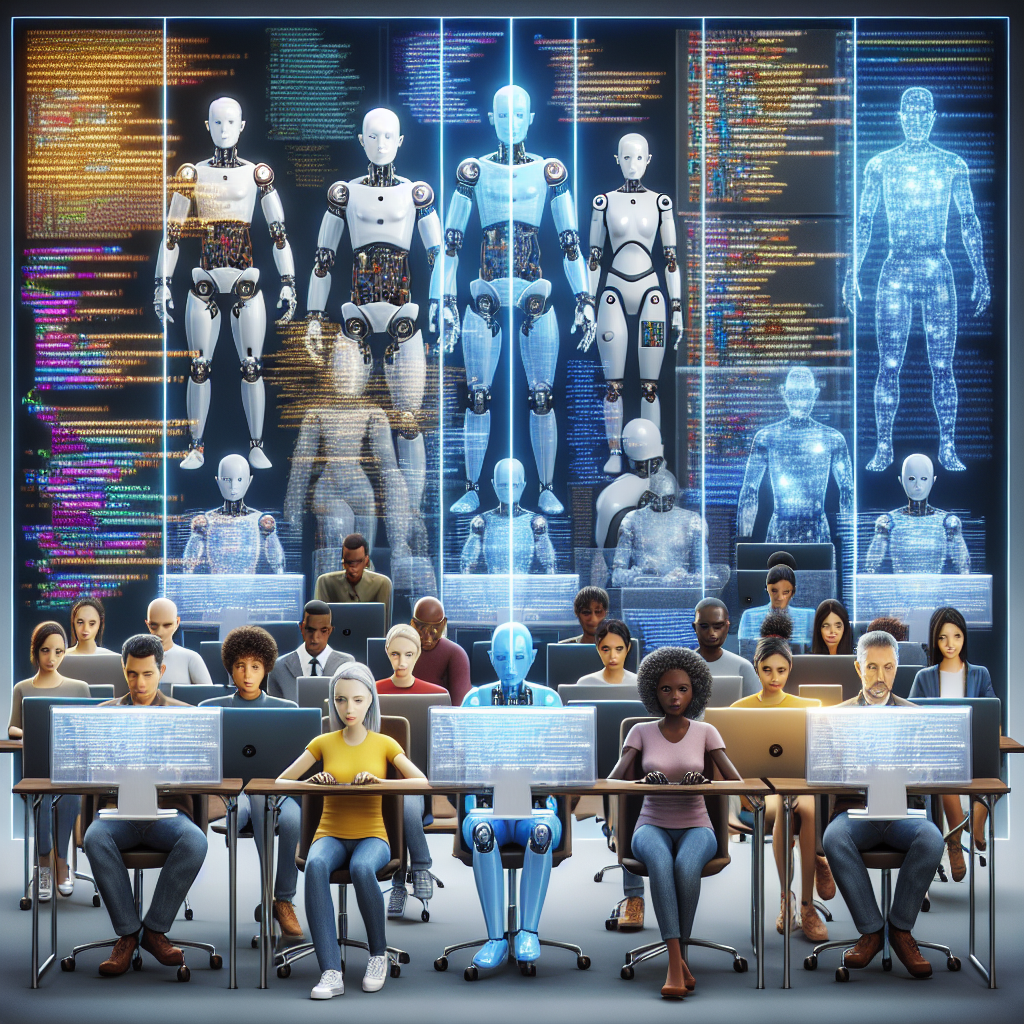

As artificial intelligence continues to redefine the software development landscape, interest is intensifying around the use of AI coding agents—autonomous or semi-autonomous systems designed to generate, debug, and even refactor source code. According to the recent article “How AI Coding Agents Work — And What to Remember If You Use Them,” published by StartupNews.fyi on December 24, 2025, these digital assistants are rapidly evolving from rudimentary tools into sophisticated collaborators capable of transforming the development process.

Built atop large language models (LLMs) trained on vast repositories of code, technical documentation, and natural language inputs, AI coding agents operate by interpreting prompts and executing tasks that have traditionally required the involvement of skilled human engineers. Unlike conventional autocomplete tools or basic integrated development environment (IDE) add-ons, these agents can comprehend higher-level objectives, interact with codebases, and adapt based on feedback loops. While many leading tech firms have customized their own proprietary systems, open-source platforms and APIs are also enabling small teams and independent developers to experiment with these technologies.
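The prompt, execute, and adapt cycle described above can be pictured with a small sketch. It is only an illustration under stated assumptions, not how any particular product works: `run_agent`, `check_add`, and `fake_model` are hypothetical names, and `fake_model` stands in for a real LLM call by returning a buggy answer first and a corrected one once it receives feedback.

```python
from typing import Callable, Optional


def run_agent(task: str,
              propose: Callable[[str, Optional[str]], str],
              check: Callable[[str], Optional[str]],
              max_rounds: int = 3) -> Optional[str]:
    """Request code, check it, and feed any failure back into the next request."""
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        candidate = propose(task, feedback)
        feedback = check(candidate)  # None means the candidate passed
        if feedback is None:
            return candidate
    return None  # no passing candidate: hand the task back to a human


def check_add(source: str) -> Optional[str]:
    """Hypothetical check: run the candidate and test its `add` function.

    A real setup would execute untrusted code in a sandbox, not with exec().
    """
    namespace: dict = {}
    try:
        exec(source, namespace)
        if namespace["add"](2, 3) != 5:
            return "add(2, 3) did not return 5"
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return None


def fake_model(task: str, feedback: Optional[str]) -> str:
    """Stand-in for an LLM call (assumption): wrong at first, fixed after feedback."""
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


result = run_agent("write add(a, b)", fake_model, check_add)
```

Capping the number of rounds and returning `None` on failure keeps a person in the loop when the agent cannot converge on a passing answer.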

According to the article, the primary draw of such agents lies in their ability to automate routine tasks, such as writing boilerplate code, conducting code reviews, or identifying deprecated libraries, thereby freeing human developers for higher-level design and problem-solving. Beyond simple automation, some of the more advanced agents can interact with development environments in real time, connect with version control systems, and even simulate user behavior to test application flows.

However, as noted by StartupNews.fyi, deploying these agents effectively requires thoughtful integration and a clear understanding of their limitations. Task formulation is critical; vague prompts often yield suboptimal or incorrect results. Effective usage depends heavily on precise input, iterative refinement, and human oversight. Moreover, there are concerns surrounding data security, licensing compliance, and the potential for model hallucination—situations in which the AI generates code that appears plausible but is in fact erroneous or insecure.

The article also emphasizes the risk of developers becoming overly reliant on AI-generated output without verifying correctness or compliance with company standards. While AI agents may deliver time savings, they are not infallible, and unvetted code can introduce vulnerabilities or technical debt. Legal implications further complicate the picture, especially when agents trained on public repositories generate code that might inadvertently replicate proprietary or licensed material.
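One way to picture the verification step is a lightweight gate that runs before a person reviews AI-generated code. The sketch below is an assumption made for illustration, not something the article prescribes: `vet_snippet` and the `RISKY_CALLS` list are hypothetical, and a check this shallow complements human review, tests, and license scanning rather than replacing them.

```python
import ast
from typing import List

# Illustrative, deliberately short list of call names to flag (assumption).
RISKY_CALLS = {"eval", "exec", "system"}


def vet_snippet(source: str) -> List[str]:
    """Return a list of findings; an empty list means nothing was flagged."""
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        # Plausible-looking but unparseable output is rejected immediately.
        return [f"syntax error on line {exc.lineno}: {exc.msg}"]

    flagged = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            func = node.func
            # Handle both bare names (eval) and attributes (os.system).
            name = func.id if isinstance(func, ast.Name) else getattr(func, "attr", None)
            if name in RISKY_CALLS:
                flagged.append((node.lineno, name))
    return [f"line {lineno}: call to {name}()" for lineno, name in sorted(flagged)]


findings = vet_snippet("import os\nos.system('ls')\nvalue = eval('1 + 1')\n")
```

An empty result only means that none of the listed patterns appeared; it says nothing about whether the code is correct, secure, or free of licensing issues.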

Corporate adoption is on the rise, particularly among startups and mid-sized firms looking to get more from lean engineering teams. Large enterprises, for their part, are experimenting with on-premises deployments of AI coding tools for internal projects to protect intellectual property and maintain regulatory control. Some observers already anticipate that AI agents could become a standard part of the development toolchain, much as version control and automated testing have.

As efforts continue to refine these systems, industry leaders and regulators alike are deliberating on frameworks to ensure responsible use. Transparency in training data, output traceability, and accountability mechanisms are among the proposals gaining traction within the developer community.

In sum, while AI coding agents offer compelling efficiencies and the promise of enhanced productivity, they demand careful management. As the article from StartupNews.fyi makes clear, these tools are best approached not as autonomous engineers but as powerful assistants—dependent on thoughtful direction, continuous monitoring, and professional scrutiny. The future of coding may indeed be written in collaboration with AI, but the responsibility for correctness and innovation ultimately remains human.